HTRC BookNLP Dataset for English-Language Fiction

Work with rich, unrestricted entity, word, and character data extracted from over 200,000 volumes of English-language fiction in the HathiTrust Digital Library (HTDL)

Short URL for this page: https://htrc.atlassian.net/l/cp/4W91G6sM


Attribution

Ryan Dubnicek, Boris Capitanu, Glen Layne-Worthey, Jennifer Christie, John A. Walsh, J. Stephen Downie (2023). The HathiTrust Research Center BookNLP Dataset for English-Language Fiction. HathiTrust Research Center. https://doi.org/10.13012/d4gy-4g41

This derived dataset is released under a Creative Commons Attribution 4.0 International License.

About the Data

BookNLP is a pipeline that combines state-of-the-art tools for a number of routine cultural analytics or NLP tasks, optimized for large volumes of text, including (verbatim from BookNLP’s GitHub documentation):

- Part-of-speech tagging

- Dependency parsing

- Entity recognition

- Character name clustering (e.g., "Tom", "Tom Sawyer", "Mr. Sawyer", "Thomas Sawyer" -> TOM_SAWYER) and coreference resolution

- Quotation speaker identification

- Supersense tagging (e.g., "animal", "artifact", "body", "cognition", etc.)

- Event tagging

- Referential gender inference (TOM_SAWYER -> he/him/his)

In practice, this means that for each book run through the pipeline, a standard (consumptive) BookNLP implementation generates the following six files:

- The .tokens file*

- The .book.html file*

- The .quote file*

- The .entities file

- The .supersense file

- The .book file

* Files marked with an asterisk are not part of this dataset because distributing them would violate HTRC's non-consumptive use policy. This release includes only the non-consumptive files, files 4 through 6: the entities, supersense, and character data (.book) files. Read on for more specific information about each of these files.

The volumes for which this data was generated are described in NovelTM Datasets for English-Language Fiction, 1700-2009 by Underwood, Kimutis, and Witte. In brief, these volumes were identified as English-language fiction using supervised machine learning trained on manually verified data. Full HathiFiles metadata (TSV) for the volumes in this dataset is available to download via this link.

Entities

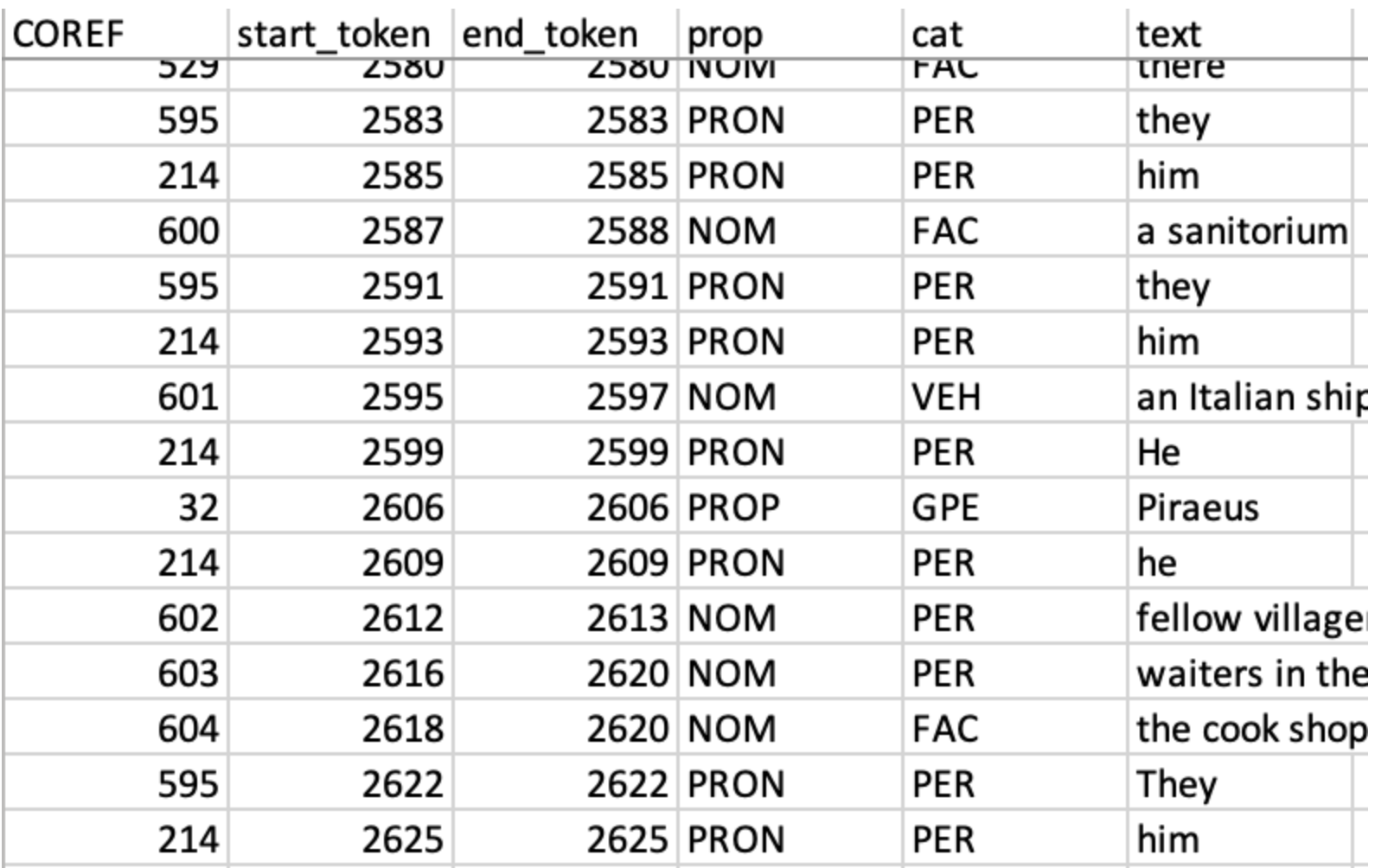

The .entities files contain entities tagged by a state-of-the-art predictive model fine-tuned to identify and extract entities from narrative text. Each file is a TSV listing every entity mention with a coreference ID (so that mentions of the same entity under different names can be identified as that one entity), the volume-level start and end token positions of the mention (e.g., “Athens” has a start and end token of 2814, since it is a single token), the entity type, and the entity string itself.

Screenshot of example of .entities TSV
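As a minimal sketch of working with these files, the Python below reads an .entities TSV and groups mention strings by coreference ID, so that "Tom Sawyer", "he", and "Mr. Sawyer" end up under one entity. It assumes the header row is COREF, start_token, end_token, prop, cat, text, which matches BookNLP output as we understand it; check the first line of a real file before relying on these column names. The sample rows are invented for illustration.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample in the assumed .entities layout (tab-separated, header row).
SAMPLE_ENTITIES = (
    "COREF\tstart_token\tend_token\tprop\tcat\ttext\n"
    "0\t10\t11\tPROP\tPER\tTom Sawyer\n"
    "0\t25\t25\tPRON\tPER\the\n"
    "7\t2814\t2814\tPROP\tGPE\tAthens\n"
    "0\t40\t41\tPROP\tPER\tMr. Sawyer\n"
)


def read_entities(handle):
    """Yield one dict per entity mention from a tab-separated .entities file."""
    # QUOTE_NONE: entity strings may contain quotation marks that are not CSV quoting.
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    for row in reader:
        row["start_token"] = int(row["start_token"])
        row["end_token"] = int(row["end_token"])
        yield row


def mentions_by_coref(rows):
    """Group mention strings by coreference ID, preserving file order."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["COREF"]].append(row["text"])
    return dict(groups)


groups = mentions_by_coref(read_entities(io.StringIO(SAMPLE_ENTITIES)))
print(groups["0"])  # ['Tom Sawyer', 'he', 'Mr. Sawyer']
```

To run it on a downloaded volume, open the file with `open('mdp.39015058712145.entities', encoding='utf-8', newline='')` and pass the handle to `read_entities`.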

Entity tagging in BookNLP is done using a predictive model trained to recognize named entities on an annotated dataset that includes the public domain materials in LitBank plus "a new dataset of ~500 contemporary books, including bestsellers, Pulitzer Prize winners, works by Black authors, global Anglophone books, and genre fiction."

Entities are tagged in the following categories:

- People (PER): e.g., Tom Sawyer, her daughter

- Facilities (FAC): the house, the kitchen

- Geo-political entities (GPE): London, the village

- Locations (LOC): the forest, the river

- Vehicles (VEH): the ship, the car

- Organizations (ORG): the army, the Church

See BookNLP's technical documentation for more information.
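For a quick overview of a volume, a per-category count of mentions can be computed with only the standard library. This is a sketch under an assumed column layout (the category is read from a column named cat); the sample rows are hypothetical. Note that it counts mentions, including pronouns and repeated references, not distinct entities.

```python
import csv
import io
from collections import Counter

# Hypothetical sample in the assumed .entities layout; only the "cat" column is used.
SAMPLE_ENTITIES = (
    "COREF\tstart_token\tend_token\tprop\tcat\ttext\n"
    "0\t10\t11\tPROP\tPER\tTom Sawyer\n"
    "3\t15\t16\tNOM\tFAC\tthe house\n"
    "0\t25\t25\tPRON\tPER\the\n"
    "5\t30\t31\tNOM\tVEH\tthe ship\n"
    "7\t2814\t2814\tPROP\tGPE\tAthens\n"
)

CATEGORIES = ("PER", "FAC", "GPE", "LOC", "VEH", "ORG")


def count_categories(handle):
    """Count entity mentions per category (PER, FAC, GPE, LOC, VEH, ORG)."""
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    counts = Counter(row["cat"] for row in reader)
    # Report every category, including those with zero mentions.
    return {cat: counts.get(cat, 0) for cat in CATEGORIES}


print(count_categories(io.StringIO(SAMPLE_ENTITIES)))
# {'PER': 2, 'FAC': 1, 'GPE': 1, 'LOC': 0, 'VEH': 1, 'ORG': 0}
```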

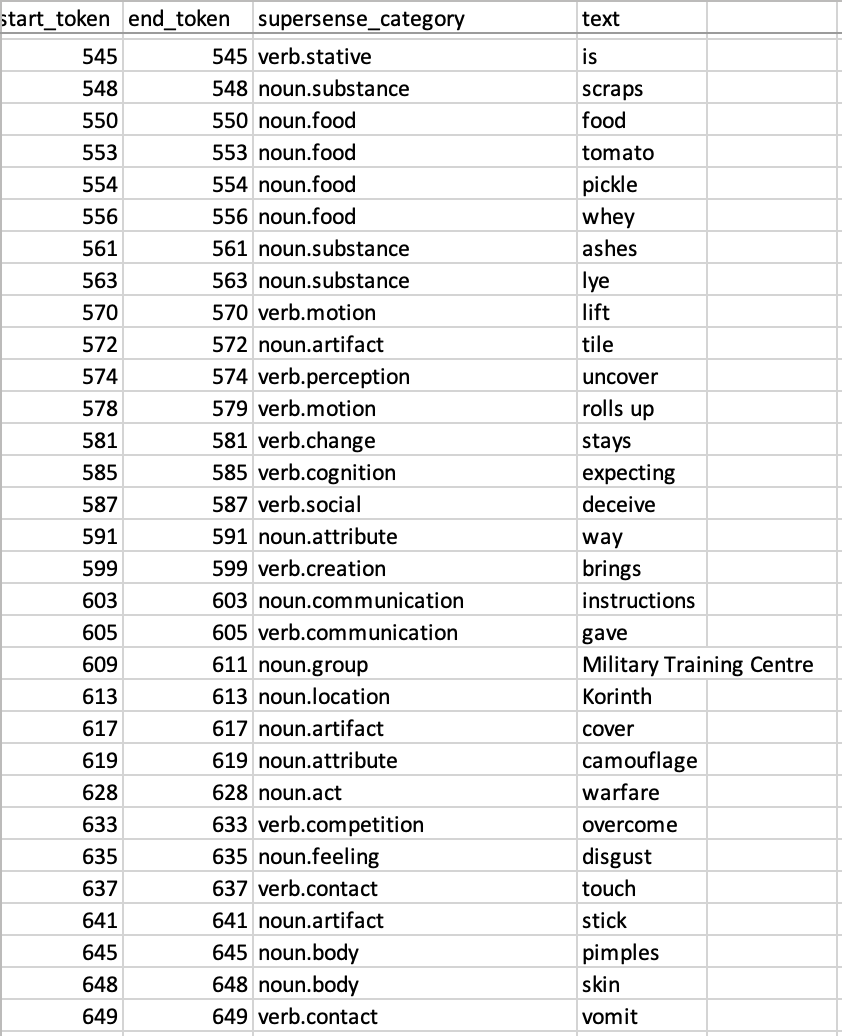

Supersenses

The .supersense file is a TSV containing tokens and phrases tagged with one of 41 lexical categories (supersenses) drawn from the lexical database WordNet. Each tag captures the coarse semantic category of a token or phrase as it is used in the sentence in which it occurs. Along with each tagged token or phrase, the file records its supersense category and its volume-level start and end token.

Screenshot of example .supersense TSV
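The .supersense file can be read in the same way as the entities file. The sketch below assumes a header row of start_token, end_token, supersense_category, text (our understanding of BookNLP's output, worth verifying against a real file) and tallies how often each category occurs in a volume; the sample rows are made up.

```python
import csv
import io
from collections import Counter

# Hypothetical sample in the assumed .supersense layout (tab-separated, header row).
SAMPLE_SUPERSENSE = (
    "start_token\tend_token\tsupersense_category\ttext\n"
    "3\t3\tverb.social\tmarried\n"
    "8\t9\tnoun.food\tapple pie\n"
    "12\t12\tnoun.animal\tdog\n"
    "20\t20\tnoun.food\tbread\n"
)


def count_supersenses(handle):
    """Return a Counter of supersense categories in a .supersense file."""
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    return Counter(row["supersense_category"] for row in reader)


counts = count_supersenses(io.StringIO(SAMPLE_SUPERSENSE))
print(counts.most_common(1))  # [('noun.food', 2)]
```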

Supersense data could be used for fine-grained entity analysis, such as an investigation into how authors write social characters (counting and analyzing occurrences of tokens tagged "verb.social"), or which foods are most popular in certain sections of volumes (see how occurrences of "noun.food" cluster within the structure of books in a workset). A complete list of supersense tags is available in WordNet's lexicographical documentation.
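One rough way to examine where a category such as "noun.food" clusters is to split a volume into equal-sized slices by token position and count occurrences per slice. The function `positional_histogram` below is a hypothetical helper, not part of BookNLP or HTRC tooling, and it assumes the same column layout as above. Because the .tokens file is not in this dataset, it falls back on the largest end_token in the file as an approximation of the volume's length when no token count is supplied.

```python
import csv
import io

# Hypothetical sample in the assumed .supersense layout (tab-separated, header row).
SAMPLE_SUPERSENSE = (
    "start_token\tend_token\tsupersense_category\ttext\n"
    "5\t5\tnoun.food\tbread\n"
    "120\t121\tverb.social\tinvited\n"
    "610\t611\tnoun.food\tapple pie\n"
    "640\t640\tnoun.food\ttea\n"
    "990\t990\tnoun.animal\thorse\n"
)


def positional_histogram(handle, category, n_bins=10, total_tokens=None):
    """Count occurrences of `category` in n_bins equal slices of a volume.

    If total_tokens is not given, the largest end_token in the file (plus one)
    stands in for the volume length; this is an approximation.
    """
    rows = list(csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE))
    bins = [0] * n_bins
    if not rows:
        return bins
    if total_tokens is None:
        total_tokens = max(int(r["end_token"]) for r in rows) + 1
    for r in rows:
        if r["supersense_category"] == category:
            # Map the start position to a slice index, clamping to the last slice.
            idx = int(r["start_token"]) * n_bins // total_tokens
            bins[min(idx, n_bins - 1)] += 1
    return bins


print(positional_histogram(io.StringIO(SAMPLE_SUPERSENSE), "noun.food"))
# [1, 0, 0, 0, 0, 0, 2, 0, 0, 0]
```

Running this over every volume in a workset and summing the bins would give a corpus-level picture, at the cost of treating volumes of very different lengths as equal.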

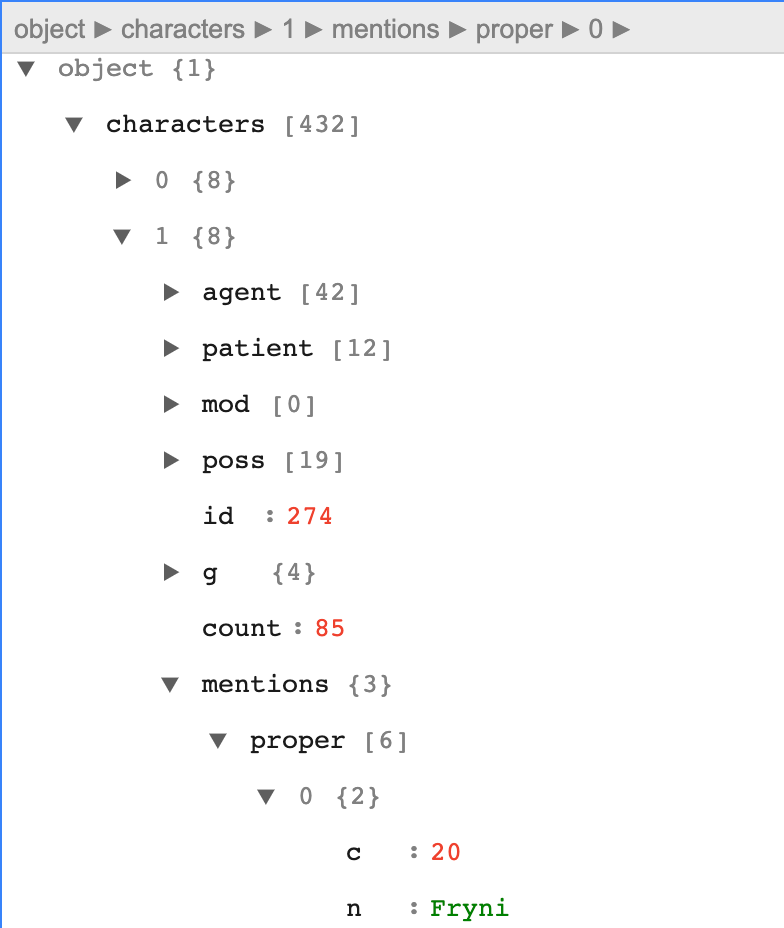

Character data (.book files)

Lastly, the .book file contains a large JSON object whose main key, “characters”, holds an array of the fictional agents in the volume (e.g., "Ebenezer Scrooge") with information about each character mentioned more than once in the text. For each character, this data includes the following classes of information:

- All of the names with which the character is associated, including pronouns, to disambiguate mentions in the text (under the label "mentions" in the JSON). For example, given the excerpt “I mean that's all I told D.B. about, and he's my brother and all,” the data for the character “D.B.” will include the names used for that character explicitly in the text as well as the pronoun “he” from this sentence.

- Words that are used to describe the character ("mod")

- Nouns the character possesses ("poss")

- Actions the character performs ("agent")

- Actions done to the character ("patient")

- An inferred gender label for the character ("g")

Example screenshot of JSON .book files viewed in a web browser with JSON pretty print plugin
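The Python sketch below loads a .book file and lists, for each character with at least one proper-name mention, the most frequent name and the verbs for which the character is the agent. The field layout is an assumption based on BookNLP output as we understand it: "mentions" holds "proper", "common", and "pronoun" lists of {"n": name, "c": count} objects, and "agent", "patient", "mod", and "poss" hold lists of {"w": word, "i": token index} objects. Inspect a real file before relying on these names; the sample character's data is invented.

```python
import json

# Hypothetical sample in the assumed .book JSON layout.
SAMPLE_BOOK = json.dumps({
    "characters": [
        {
            "id": 12,
            "count": 4,
            "mentions": {
                "proper": [{"n": "D.B.", "c": 2}],
                "common": [{"n": "my brother", "c": 1}],
                "pronoun": [{"n": "he", "c": 1}],
            },
            "agent": [{"w": "wrote", "i": 40}, {"w": "lives", "i": 88}],
            "patient": [{"w": "told", "i": 12}],
            "mod": [{"w": "rich", "i": 95}],
            "poss": [{"w": "car", "i": 102}],
        }
    ]
})


def summarize_characters(book_json):
    """Return (most frequent proper name, agent verbs) for each named character."""
    data = json.loads(book_json)
    summary = []
    for char in data["characters"]:
        proper = char.get("mentions", {}).get("proper", [])
        if not proper:
            continue  # skip characters referred to only by common nouns or pronouns
        name = max(proper, key=lambda m: m["c"])["n"]
        verbs = [a["w"] for a in char.get("agent", [])]
        summary.append((name, verbs))
    return summary


print(summarize_characters(SAMPLE_BOOK))  # [('D.B.', ['wrote', 'lives'])]
```

For a downloaded volume, read the file with `open('mdp.39015058712145.book', encoding='utf-8')` and pass its contents to `summarize_characters`.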

This data alone could support inquiry into character descriptions, actions, and narrative roles, among many other research questions, without requiring access to the full text of volumes, which is especially valuable for volumes whose text cannot be accessed without violating copyright.
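As one illustration of the kinds of questions above, the sketch below summarizes each character's most frequent descriptive ("mod") words and the share of their recorded actions in which they are the agent rather than the patient. That share is only a crude, hypothetical proxy for narrative role, not an established measure. The JSON layout is assumed (lists of {"w": word, "i": token index} objects under "agent", "patient", and "mod", and {"n": name, "c": count} objects under "mentions"), and the two sample characters are invented.

```python
import json
from collections import Counter

# Hypothetical sample in the assumed .book JSON layout.
SAMPLE_BOOK = json.dumps({
    "characters": [
        {"id": 1, "mentions": {"proper": [{"n": "Scrooge", "c": 5}]},
         "agent": [{"w": "said", "i": 3}, {"w": "said", "i": 50}, {"w": "walked", "i": 70}],
         "patient": [{"w": "visited", "i": 90}],
         "mod": [{"w": "old", "i": 10}, {"w": "cold", "i": 11}, {"w": "Old", "i": 30}]},
        {"id": 2, "mentions": {"proper": [{"n": "Marley", "c": 2}]},
         "agent": [{"w": "appeared", "i": 120}],
         "patient": [{"w": "buried", "i": 5}, {"w": "remembered", "i": 140}],
         "mod": [{"w": "dead", "i": 6}]},
    ]
})


def describe_characters(book_json, top_n=3):
    """Per character: name, top descriptive words, and share of actions as agent."""
    rows = []
    for char in json.loads(book_json)["characters"]:
        proper = char.get("mentions", {}).get("proper", [])
        name = max(proper, key=lambda m: m["c"])["n"] if proper else f"id {char.get('id')}"
        # Lowercase so "Old" and "old" are counted together.
        mods = Counter(m["w"].lower() for m in char.get("mod", []))
        n_agent = len(char.get("agent", []))
        n_patient = len(char.get("patient", []))
        total = n_agent + n_patient
        agent_share = n_agent / total if total else None
        rows.append((name, [w for w, _ in mods.most_common(top_n)], agent_share))
    return rows


for name, mods, share in describe_characters(SAMPLE_BOOK):
    print(name, mods, share)
```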

A Note on Gender

Gender inference is a challenging computational task, and a challenging one even for human readers and scholars. Our understanding of gender has also developed over time. The male-female gender binary, a relic of the past, is no longer considered adequate to reflect the realities of our society, which poses challenges for historical research, especially research done at scale with minimal human intervention. While BookNLP supports many labels in the gender field, the state of the art in gender tagging is advancing rapidly, and those who study gender are likely to consider the data in this dataset outdated very soon, if not already. Please keep this in mind when using this data.

Getting the Data

Download the data via rsync

The data as described above is available using rsync, a command line utility for transferring large datasets. There are a number of tutorials online for installing and managing rsync, including this particularly helpful example from Hostinger.

Keep in mind that the entire unmodified BookNLP dataset is just under 452 GB, and that rsync downloads files by name and location. The data is stored in a flat directory in which each volume (represented by a HathiTrust ID, or HTID) has three associated files: one for entities, one for supersenses, and one for character data. For example:

mdp.39015058712145.entities

mdp.39015058712145.supersense

mdp.39015058712145.book
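After a download, it can be useful to confirm that every volume has all three files. The hypothetical helpers below group the files in a local folder by filename stem (everything before the extension) and report stems missing any of the three extensions. They assume the folder holds files named exactly as shown above; any other files are ignored.

```python
import os
from collections import defaultdict

# The three file extensions in this dataset.
EXTENSIONS = (".entities", ".supersense", ".book")


def group_by_volume(folder):
    """Map each volume's filename stem to the set of dataset extensions present."""
    volumes = defaultdict(set)
    for filename in os.listdir(folder):
        for ext in EXTENSIONS:
            if filename.endswith(ext):
                volumes[filename[: -len(ext)]].add(ext)
                break
    return dict(volumes)


def incomplete_volumes(folder):
    """Return sorted stems of volumes missing at least one of the three files."""
    return sorted(stem for stem, exts in group_by_volume(folder).items()
                  if set(EXTENSIONS) - exts)
```

For example, `incomplete_volumes("booknlp-data")` lists the volumes whose transfer you may want to retry.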

To download the files for any one volume, you'll need to issue the rsync command individually for each file or pass a list of filenames to the rsync command in order to download multiple files in one command.

Note: HTIDs correspond directly to filenames for volumes in HathiTrust. However, some of the volumes first ingested into the HathiTrust Digital Library have HTIDs containing characters that cannot be used in filenames, so the filenames for these volumes use substitutes. You will need to make the same substitutions in your file list before running the rsync command, replacing the following characters:

| HTID character | Substitute with |
|---|---|
| : | + |
| / | = |
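The substitution in the table can be expressed as two small Python functions: one converts an HTID to its filename stem, and the other reverses the mapping, which is handy when joining downloaded files back to HathiFiles metadata by HTID. The reverse direction assumes that "+" and "=" never appear in an original HTID, so every occurrence in a filename must come from the substitution; the function names are our own.

```python
def htid_to_stem(htid: str) -> str:
    """Apply the table's substitutions: ':' -> '+', '/' -> '='."""
    return htid.replace(":", "+").replace("/", "=")


def stem_to_htid(stem: str) -> str:
    """Undo the substitutions (assumes '+' and '=' never occur in real HTIDs)."""
    return stem.replace("+", ":").replace("=", "/")


print(htid_to_stem("uc2.ark:/13960/fk6057d64t"))  # uc2.ark+=13960=fk6057d64t
```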

Example rsync commands:

To download the files to a folder named "booknlp-data", see the following example commands:

Rsync one file:

```shell
rsync -av data.analytics.hathitrust.org::booknlp/mdp.39015058712145/mdp.39015058712145.entities /Desktop/booknlp-data
```

Note: make sure you first create the folder to which you'll rsync the data, here named booknlp-data.

Rsync all files:

```shell
rsync -av data.analytics.hathitrust.org::booknlp/ /Desktop/booknlp-data
```

Rsync all files from a list (files-i-want.txt):

```shell
rsync -av --files-from=files-i-want.txt data.analytics.hathitrust.org::booknlp/ /Desktop/booknlp-data
```

To download data for only certain volumes, you'll first need a list of HathiTrust identifiers (HTIDs) for the volumes you'd like data for. Once you have a list of IDs, you can create a list of filenames by appending the file extensions ('.entities', '.supersense', and/or '.book') to the end of each ID. Here is a Python function for converting a list of HTIDs to a list of HTRC ELF BookNLP Dataset filenames:

```python
def ark_fix(htid: str) -> str:
    """Substitute characters that cannot appear in filenames (':' -> '+', '/' -> '=')."""
    return htid.replace(':', '+').replace('/', '=')


def get_htrc_booknlp_files(htids: list, files_to_download: list,
                           out_path: str = 'files-i-want.txt') -> list:
    """Build the dataset filenames for the given HTIDs and write them,
    one per line, to out_path for use with rsync --files-from."""
    files_i_want = []
    for htid in htids:
        # Strip stray whitespace/newlines, then apply the filename substitutions.
        clean_id = ark_fix(str(htid).strip())
        if not clean_id:
            continue
        # Keep a fixed order: supersense, entities, book.
        for ext in ('.supersense', '.entities', '.book'):
            if ext in files_to_download:
                files_i_want.append(clean_id + ext)

    with open(out_path, 'w') as f:
        for filename in files_i_want:
            f.write(filename + '\n')

    return files_i_want
```

You can use the above function to generate the needed file, files-i-want.txt, with the following Python code:

```python
# Example for a small set of volumes:
htids = ['uc2.ark:/13960/fk6057d64t', 'mdp.39015008410345']
desired_files = ['.book', '.supersense', '.entities']
files_to_rsync = get_htrc_booknlp_files(htids, desired_files)

# Example for a large list of volumes from an outside file, htids_i_want.txt
# (one HTID per line; trailing newlines are stripped):
with open('htids_i_want.txt', 'r') as f:
    htids = [line.strip() for line in f if line.strip()]

desired_files = ['.book', '.supersense', '.entities']
files_to_rsync = get_htrc_booknlp_files(htids, desired_files)
```

If you need assistance doing this programmatically, please get in touch with HTRC at htrc-help@hathitrust.org! We have expertise in assembling worksets of volumes relevant to specific research questions and may even already have code or resources available to help with these tasks.

Rsync a file (file_listing.txt) containing a list of all files available to download:

```shell
rsync -av data.analytics.hathitrust.org::booknlp/file_listing.txt /Desktop/booknlp-data
```
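Once you have file_listing.txt, you can check a request list against it before starting a long transfer. The sketch below assumes both files contain one entry per line; it compares only the final path component, so it works whether the listing stores bare filenames or paths with directories. The function names are illustrative, not part of any HTRC tool.

```python
import os


def read_names(path):
    """Read non-empty lines from a text file, stripped of surrounding whitespace."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]


def missing_from_listing(requested_path, listing_path):
    """Return entries of requested_path whose filename is absent from listing_path."""
    # Compare basenames, since the listing's exact format is assumed, not confirmed.
    available = {os.path.basename(name) for name in read_names(listing_path)}
    return [name for name in read_names(requested_path)
            if os.path.basename(name) not in available]
```

For example, `missing_from_listing("files-i-want.txt", "/Desktop/booknlp-data/file_listing.txt")` returns the requested files that the listing does not contain.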

Rsync a file (hathifiles_booknlp.tsv) containing bibliographic metadata (sourced from the HathiFiles) for all volumes included in the dataset:

```shell
rsync -av data.analytics.hathitrust.org::booknlp/hathifiles_booknlp.tsv /Desktop/booknlp-data
```

Accessing the Dataset from an HTRC Data Capsule

In addition to publishing this BookNLP dataset publicly as described above, HTRC also plans to make the entire dataset, including the three files removed from the public dataset, available to researchers within the HTRC Data Capsule. This is planned to be completed in 2023, so check back with this page periodically for more information.

External Resources

In addition to this webpage and HTRC staff (reachable via htrc-help@hathitrust.org), the following external resources may be useful to anyone seeking more information about, or guides to using, the data in this dataset:

- The BookNLP GitHub repository: https://github.com/booknlp/booknlp

- Dr. William Mattingly's excellent tutorial on BookNLP: https://booknlp.pythonhumanities.com/intro.html

- Dr. Mattingly's corresponding series of video tutorials on using BookNLP in Python: https://www.youtube.com/playlist?list=PL2VXyKi-KpYu3WJKc1c94OSUF5pujrygD

- Let us know if you find others that are useful!